Everything You Need to Know About Amazon S3 Storage Options and Lifecycle Management

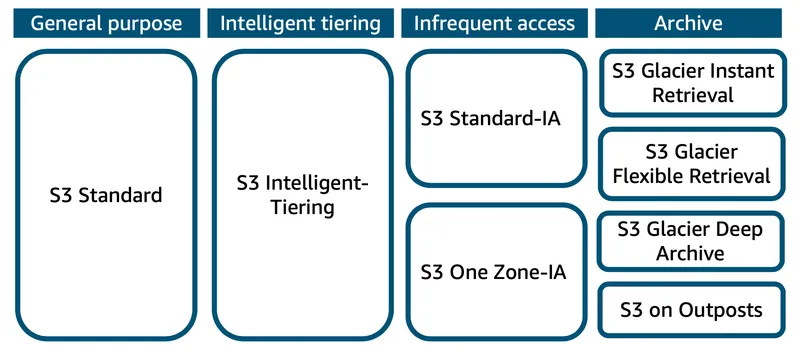

Choosing the best storage option and saving money on your AWS bill can be quite challenging, especially when you're unfamiliar with the different Amazon S3 storage classes. In this blog post, we explore which storage class fits which requirements and how S3 Lifecycle policies can help you manage data efficiently. Amazon S3 offers several storage classes, as shown in the figure below.

Let’s dive into each storage option and lifecycle management in detail:

S3 Standard

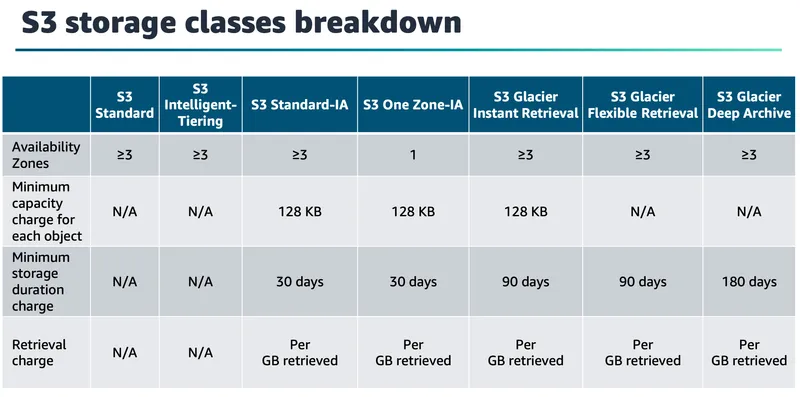

S3 Standard offers high durability, availability, and performance for frequently accessed data. It is designed for 99.999999999% (11 nines) durability and 99.99% availability, and it delivers low latency and high throughput, making it well suited to cloud applications, dynamic websites, content distribution, mobile and gaming apps, and big data analytics. Objects are stored redundantly across at least three Availability Zones.

Use case: When you need frequent and fast data access.

Example: Imagine running an e-commerce website. Product images, customer data, and order details are accessed around the clock. Storing them in S3 Standard ensures they are always available quickly and reliably, making it the best choice for frequently used data.
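S3 Standard is the default storage class: an object uploaded without a storage class lands in S3 Standard, and any other class is chosen per object at upload time. The sketch below assumes boto3's S3 client and its put_object call with the StorageClass parameter; the helper name, bucket, and key are hypothetical. It only builds the request arguments, so it runs without AWS credentials.

```python
# Hypothetical helper: map the storage class names used in this post to the
# identifiers S3 expects in the StorageClass parameter (x-amz-storage-class).
STORAGE_CLASS_IDS = {
    "S3 Standard": "STANDARD",
    "S3 Intelligent-Tiering": "INTELLIGENT_TIERING",
    "S3 Standard-IA": "STANDARD_IA",
    "S3 One Zone-IA": "ONEZONE_IA",
    "S3 Glacier Instant Retrieval": "GLACIER_IR",
    "S3 Glacier Flexible Retrieval": "GLACIER",
    "S3 Glacier Deep Archive": "DEEP_ARCHIVE",
}


def put_object_kwargs(bucket: str, key: str, body: bytes,
                      class_name: str = "S3 Standard") -> dict:
    """Build keyword arguments for boto3's S3 client put_object call."""
    try:
        storage_class = STORAGE_CLASS_IDS[class_name]
    except KeyError:
        raise ValueError(f"unknown storage class: {class_name!r}") from None
    return {"Bucket": bucket, "Key": key, "Body": body, "StorageClass": storage_class}


if __name__ == "__main__":
    # "my-shop-assets" and the object key are placeholder names.
    kwargs = put_object_kwargs("my-shop-assets", "products/123.jpg", b"...")
    print(kwargs["StorageClass"])  # STANDARD
    # With boto3 installed and credentials configured, the upload would be:
    #   import boto3
    #   boto3.client("s3").put_object(**kwargs)
```

Note that the API identifier for S3 Glacier Flexible Retrieval is GLACIER, which is why that name appears in lifecycle configurations.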

S3 Intelligent-Tiering

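S3 Intelligent-Tiering automatically moves objects to the most cost-effective access tier based on how often they are accessed, without performance impact, retrieval fees, or operational overhead; instead, you pay a small monthly monitoring and automation charge per object. It works well for data with changing or unknown access patterns.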

Use case: When data access is unpredictable.

Example: A video-sharing site where some videos are watched daily, some monthly, and others rarely. Intelligent-Tiering adjusts storage automatically to save costs while keeping data available.
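The automatic tiering can be pictured as a function of how long an object has gone unread: objects not accessed for 30 consecutive days move to the Infrequent Access tier, objects not accessed for 90 days move to the Archive Instant Access tier, and any access moves an object back to Frequent Access. The minimal model below assumes only these three automatic tiers (the opt-in Archive Access and Deep Archive Access tiers are ignored), and the function name is hypothetical.

```python
# Minimal model of S3 Intelligent-Tiering's automatic access tiers.
# Assumption: the optional, opt-in archive tiers are not modeled.
MIN_MONITORED_SIZE = 128 * 1024  # objects under 128 KB are not auto-tiered


def access_tier(days_since_last_access: int, size_bytes: int) -> str:
    """Return the automatic access tier an object would sit in."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access must be non-negative")
    if size_bytes < MIN_MONITORED_SIZE:
        # Small objects always stay in (and are billed at) Frequent Access.
        return "Frequent Access"
    if days_since_last_access >= 90:
        return "Archive Instant Access"
    if days_since_last_access >= 30:
        return "Infrequent Access"
    return "Frequent Access"


if __name__ == "__main__":
    video_size = 500 * 1024 * 1024  # a 500 MB video file
    print(access_tier(2, video_size))    # Frequent Access: watched daily
    print(access_tier(45, video_size))   # Infrequent Access: watched rarely
    print(access_tier(200, video_size))  # Archive Instant Access: not watched
```

In the video-sharing example, a rarely watched video drifts down the tiers on its own, and the next view resets its counter so it returns to Frequent Access.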

S3 Standard-IA (Infrequent Access)

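S3 Standard-IA offers the same durability, low latency, and high throughput as S3 Standard, but is designed for data that is accessed less often, such as older images or log files. Its storage price is lower, but it has a 30-day minimum storage duration charge, a 128 KB minimum billable object size, and a per-GB retrieval fee that S3 Standard does not charge. It is also designed for 99.9% availability rather than the 99.99% of S3 Standard.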

Use case: When you store data that is not needed frequently but must be durable.

Example: Archiving last year’s customer reports that are rarely accessed but must be kept for reference.
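The minimum storage duration and minimum object size change what you are actually billed for. The sketch below applies the published minimums for several classes (30 days and 128 KB for Standard-IA and One Zone-IA, 90 days and 128 KB for Glacier Instant Retrieval, 90 days for Glacier Flexible Retrieval, 180 days for Glacier Deep Archive). It is an illustration only: the function name is hypothetical, and the per-object metadata overhead of the two Glacier archive classes is not modeled.

```python
# Minimum storage duration (days) and minimum billable object size (bytes).
# Assumption: archive metadata overhead and prices are deliberately left out.
KB = 1024
GB = 1024 ** 3
MINIMUMS = {
    "STANDARD": (0, 0),
    "STANDARD_IA": (30, 128 * KB),
    "ONEZONE_IA": (30, 128 * KB),
    "GLACIER_IR": (90, 128 * KB),
    "GLACIER": (90, 0),
    "DEEP_ARCHIVE": (180, 0),
}


def billable_byte_days(storage_class: str, size_bytes: int, days_stored: int) -> int:
    """Byte-days you are charged for after applying the class minimums."""
    min_days, min_size = MINIMUMS[storage_class]
    return max(size_bytes, min_size) * max(days_stored, min_days)


if __name__ == "__main__":
    # A 1 GB report deleted from Standard-IA after 10 days is billed as 30 days.
    print(billable_byte_days("STANDARD_IA", GB, 10) // GB)  # 30
    # A 64 KB thumbnail kept 30 days in Standard-IA is billed as 128 KB.
    print(billable_byte_days("STANDARD_IA", 64 * KB, 30) // KB)  # 3840
```

Multiplying the result by the per-GB-month price of a class gives a rough cost comparison, which is why short-lived or very small objects are often cheaper to keep in S3 Standard.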

S3 One Zone-IA

S3 One Zone-IA stores data in a single Availability Zone, which makes it cheaper than S3 Standard-IA but means the data can be lost if that Availability Zone is destroyed. It is ideal for secondary backup copies or data that can be recreated.

Use case: When you want cost-effective storage and can afford lower availability.

Example: Keeping a secondary copy of a dataset that already exists in another Region, or that can be recreated from its source, so losing the copy would not be critical.

S3 Glacier Instant Retrieval

S3 Glacier Instant Retrieval is a low-cost archive storage class for data that is rarely accessed (about once a quarter) but must be retrievable in milliseconds. It has a 90-day minimum storage duration charge.

Use case: Archiving data that might need quick access.

Example: Storing medical images, news media assets, or user-generated content that must be accessed instantly when required.

S3 Glacier Flexible Retrieval

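S3 Glacier Flexible Retrieval (formerly S3 Glacier) is for archive data that doesn't need immediate access and is typically read once or twice a year. Retrieval is asynchronous: you first request a restore and choose Expedited (typically 1-5 minutes), Standard (3-5 hours), or Bulk (5-12 hours) retrieval, and Bulk retrievals are free. It has a 90-day minimum storage duration charge.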

Use case: Backup, disaster recovery, or offsite storage.

Example: Keeping annual financial reports or old backup files that are rarely needed but must be preserved.

S3 Glacier Deep Archive

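S3 Glacier Deep Archive is the lowest-cost storage class in S3, designed for long-term retention of data that is accessed once or twice a year. Standard restores complete within 12 hours and Bulk restores within 48 hours, and there is a 180-day minimum storage duration charge.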

Use case: Regulatory compliance or long-term data preservation.

Example: Archiving healthcare records or financial datasets that must be retained by law for 7-10 years.
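Objects in S3 Glacier Flexible Retrieval and S3 Glacier Deep Archive must be restored before they can be read, and the two classes support different retrieval tiers: Flexible Retrieval offers Expedited, Standard, and Bulk, while Deep Archive offers only Standard and Bulk. The sketch below builds the RestoreRequest argument for boto3's S3 client restore_object call; the helper name is hypothetical, and only these two classes are handled.

```python
# Retrieval tiers available per archive storage class.
ALLOWED_TIERS = {
    "GLACIER": {"Expedited", "Standard", "Bulk"},  # Glacier Flexible Retrieval
    "DEEP_ARCHIVE": {"Standard", "Bulk"},          # Glacier Deep Archive
}


def restore_request(storage_class: str, tier: str = "Standard", days: int = 1) -> dict:
    """Return a RestoreRequest dict.

    `days` is how long the temporary restored copy stays readable.
    """
    if storage_class not in ALLOWED_TIERS:
        raise ValueError(f"restore not modeled for {storage_class}")
    if tier not in ALLOWED_TIERS[storage_class]:
        raise ValueError(f"{tier} retrieval is not available for {storage_class}")
    if days < 1:
        raise ValueError("days must be at least 1")
    return {"Days": days, "GlacierJobParameters": {"Tier": tier}}


if __name__ == "__main__":
    request = restore_request("DEEP_ARCHIVE", "Bulk", days=7)
    print(request)
    # With boto3 (bucket and key are placeholders):
    #   import boto3
    #   boto3.client("s3").restore_object(
    #       Bucket="records-archive", Key="2015/patients.csv", RestoreRequest=request)
```

Bulk is the usual choice for large compliance retrievals because it is the cheapest tier; Expedited only makes sense for urgent, small restores from Flexible Retrieval.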

S3 on Outposts

S3 on Outposts brings S3 storage on-premises, using the same S3 APIs. It stores data locally on your Outpost, providing durability, redundancy, and local access for workloads with strict performance or residency needs.

Use case: When data must remain on-premises or close to local applications.

Example: Running an on-site enterprise application that requires fast access to large datasets stored locally.

S3 Lifecycle Policies

S3 Lifecycle policies automate transitioning objects between S3 storage classes, or deleting them, according to rules you define. This keeps data in the most cost-effective storage class as its access pattern changes over time, without manual intervention.

Key Features of S3 Lifecycle Policies

- Transition Actions: Move objects to a different storage class (e.g., from S3 Standard to S3 Standard-IA or S3 Glacier Flexible Retrieval) a specified number of days after they are created.

- Expiration Actions: Automatically delete objects after a defined retention period.

- Flexibility: Apply a rule to an entire bucket, or filter it to a subset of objects by key prefix, object tags, or object size.

- Cost Savings: Reduce storage costs by moving infrequently accessed data to lower-cost storage classes like S3 Glacier Flexible Retrieval or S3 Glacier Deep Archive.
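To make the filtering options concrete, the sketch below models locally how a rule's Filter selects objects: a prefix, a single Tag, size bounds, or an And block in which every condition must hold, with an empty filter covering the whole bucket. The function names and the "retention" tag are hypothetical; S3 evaluates filters itself, and this is only an aid to reasoning about which objects a rule will touch.

```python
# Hypothetical local model of lifecycle Filter matching.
# Handles {}, {"Prefix": ...}, {"Tag": {...}}, size bounds, and {"And": {...}}.


def _matches_conditions(key: str, tags: dict, size: int, cond: dict) -> bool:
    """Every condition present in `cond` must hold (how And combines them)."""
    if not key.startswith(cond.get("Prefix", "")):
        return False
    # And blocks use a "Tags" list; a standalone filter uses a single "Tag".
    tag_list = cond.get("Tags", []) + ([cond["Tag"]] if "Tag" in cond else [])
    for tag in tag_list:
        if tags.get(tag["Key"]) != tag["Value"]:
            return False
    if "ObjectSizeGreaterThan" in cond and not size > cond["ObjectSizeGreaterThan"]:
        return False
    if "ObjectSizeLessThan" in cond and not size < cond["ObjectSizeLessThan"]:
        return False
    return True


def rule_applies(rule_filter: dict, key: str, tags: dict, size: int) -> bool:
    """True if an object with this key, tag set, and size is in the rule's scope."""
    cond = rule_filter.get("And", rule_filter)
    return _matches_conditions(key, tags, size, cond)


if __name__ == "__main__":
    log_filter = {"And": {"Prefix": "logs/",
                          "Tags": [{"Key": "retention", "Value": "short"}]}}
    print(rule_applies(log_filter, "logs/2024/app.log", {"retention": "short"}, 2048))  # True
    print(rule_applies(log_filter, "logs/2024/app.log", {}, 2048))  # False: tag missing
    print(rule_applies({}, "anything.txt", {}, 1))  # True: empty filter = whole bucket
```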

Use Case

Example: For a data analytics platform, you might store raw data in S3 Standard for real-time analysis. After 30 days, move it to S3 Standard-IA for infrequent access. After 90 days, transition it to S3 Glacier Flexible Retrieval for long-term storage, and after 7 years, move it to S3 Glacier Deep Archive for compliance. You can also set an expiration rule to delete temporary files after 60 days.
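The analytics example maps directly onto a lifecycle configuration. The sketch below builds it as a Python dictionary, with 7 years expressed as 7 × 365 = 2,555 days because lifecycle rules count days since object creation, and runs a few sanity checks (not the full set S3 enforces). The "raw/" and "temp/" prefixes, the rule IDs, and the helper names are assumptions for illustration.

```python
import json

SEVEN_YEARS = 7 * 365  # lifecycle Days are counted from object creation


def analytics_lifecycle(raw_prefix: str = "raw/", temp_prefix: str = "temp/") -> dict:
    """Hypothetical configuration for the analytics example."""
    return {
        "Rules": [
            {
                "ID": "TierRawData",
                "Status": "Enabled",
                "Filter": {"Prefix": raw_prefix},
                "Transitions": [
                    {"Days": 30, "StorageClass": "STANDARD_IA"},
                    {"Days": 90, "StorageClass": "GLACIER"},
                    {"Days": SEVEN_YEARS, "StorageClass": "DEEP_ARCHIVE"},
                ],
            },
            {
                "ID": "ExpireTemporaryFiles",
                "Status": "Enabled",
                "Filter": {"Prefix": temp_prefix},
                "Expiration": {"Days": 60},
            },
        ]
    }


def check_transitions(rule: dict) -> None:
    """Raise ValueError if transition days are out of order or too early for IA."""
    transitions = rule.get("Transitions", [])
    days = [t["Days"] for t in transitions]
    if days != sorted(days) or len(set(days)) != len(days):
        raise ValueError("transition days must be strictly increasing")
    for t in transitions:
        if t["StorageClass"] in {"STANDARD_IA", "ONEZONE_IA"} and t["Days"] < 30:
            raise ValueError("transitions to Standard-IA or One Zone-IA need Days >= 30")


if __name__ == "__main__":
    config = analytics_lifecycle()
    for rule in config["Rules"]:
        check_transitions(rule)
    print(json.dumps(config, indent=2))
```

The printed JSON can be saved to a file and applied with `aws s3api put-bucket-lifecycle-configuration --bucket <bucket> --lifecycle-configuration file://lifecycle.json`, or the dictionary can be passed directly as the LifecycleConfiguration argument of boto3's put_bucket_lifecycle_configuration.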

Example Lifecycle Policy Configuration

Below is a sample S3 lifecycle policy configuration in JSON format to transition objects and manage their lifecycle:

```json
{
  "Rules": [
    {
      "ID": "MoveToStandardIA",
      "Status": "Enabled",
      "Filter": {
        "Prefix": "logs/"
      },
      "Transitions": [
        {
          "Days": 30,
          "StorageClass": "STANDARD_IA"
        },
        {
          "Days": 90,
          "StorageClass": "GLACIER"
        },
        {
          "Days": 365,
          "StorageClass": "DEEP_ARCHIVE"
        }
      ]
    },
    {
      "ID": "DeleteTemporaryFiles",
      "Status": "Enabled",
      "Filter": {
        "Prefix": "temp/"
      },
      "Expiration": {
        "Days": 60
      }
    }
  ]
}
```

Explanation:

- The first rule (MoveToStandardIA) transitions objects under the logs/ prefix to S3 Standard-IA after 30 days, then to S3 Glacier Flexible Retrieval after 90 days, and finally to S3 Glacier Deep Archive after 365 days.

- The second rule (DeleteTemporaryFiles) deletes objects under the temp/ prefix after 60 days.
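One way to check your reading of the two rules is to ask, for a given object key and age, where the object should end up. The sketch below loads the same configuration and answers that question. It is a simplified model under stated assumptions: objects start in S3 Standard, only prefix filters and Days-based actions are handled, overlapping rules are not resolved the way S3 resolves them, and the function name is hypothetical. In practice S3 applies lifecycle actions asynchronously, so a transition can happen somewhat after the configured day.

```python
import json

# The lifecycle configuration shown above, in compact form.
POLICY = json.loads("""
{"Rules": [
  {"ID": "MoveToStandardIA", "Status": "Enabled", "Filter": {"Prefix": "logs/"},
   "Transitions": [{"Days": 30, "StorageClass": "STANDARD_IA"},
                   {"Days": 90, "StorageClass": "GLACIER"},
                   {"Days": 365, "StorageClass": "DEEP_ARCHIVE"}]},
  {"ID": "DeleteTemporaryFiles", "Status": "Enabled", "Filter": {"Prefix": "temp/"},
   "Expiration": {"Days": 60}}
]}
""")


def expected_state(policy: dict, key: str, age_days: int) -> str:
    """Storage class (or "EXPIRED") an object should have reached at a given age."""
    state = "STANDARD"
    for rule in policy["Rules"]:
        if rule["Status"] != "Enabled":
            continue
        if not key.startswith(rule.get("Filter", {}).get("Prefix", "")):
            continue
        expiration = rule.get("Expiration", {}).get("Days")
        if expiration is not None and age_days >= expiration:
            return "EXPIRED"
        # Apply every transition whose day threshold has passed, in order.
        for transition in sorted(rule.get("Transitions", []), key=lambda t: t["Days"]):
            if age_days >= transition["Days"]:
                state = transition["StorageClass"]
    return state


if __name__ == "__main__":
    for age in (10, 45, 100, 400):
        print(age, expected_state(POLICY, "logs/app.log", age))
    # 10 STANDARD / 45 STANDARD_IA / 100 GLACIER / 400 DEEP_ARCHIVE
    print(expected_state(POLICY, "temp/scratch.csv", 61))  # EXPIRED
```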

By leveraging S3 Lifecycle Policies, you can automate data management, reduce manual overhead, and optimize costs while ensuring data availability and compliance.